LangChain and LangGraph

LangChain and LangGraph are two framework/library families for developing applications backed by large language models (LLMs). LangChain has a wide range of drivers for most language models on the market and their related services, covering a large number of vendors as well as locally deployed models. LangChain implements a concept of linear, sequential workflows, e.g. when a task is to be performed by agents step by step: A → B → C (Agent A to C).
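The linear A → B → C flow can be illustrated with a minimal sketch in plain Python. This is not the LangChain API; the three agent functions are hypothetical stand-ins that only show how each step receives the output of the previous one.

```python
from typing import Callable, List

# Hypothetical stand-ins for agents: each takes text and returns text.
Step = Callable[[str], str]


def agent_a(text: str) -> str:
    # Step A: normalize the incoming task.
    return text.strip()


def agent_b(text: str) -> str:
    # Step B: transform the result of step A.
    return text.upper()


def agent_c(text: str) -> str:
    # Step C: wrap the result of step B.
    return f"[{text}]"


def run_sequence(steps: List[Step], task: str) -> str:
    """Pass the task through each step in order: A -> B -> C."""
    result = task
    for step in steps:
        result = step(result)
    return result


print(run_sequence([agent_a, agent_b, agent_c], "  summarize this  "))
```

The order of the list is the whole workflow: there is no branching and no way back to an earlier step.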

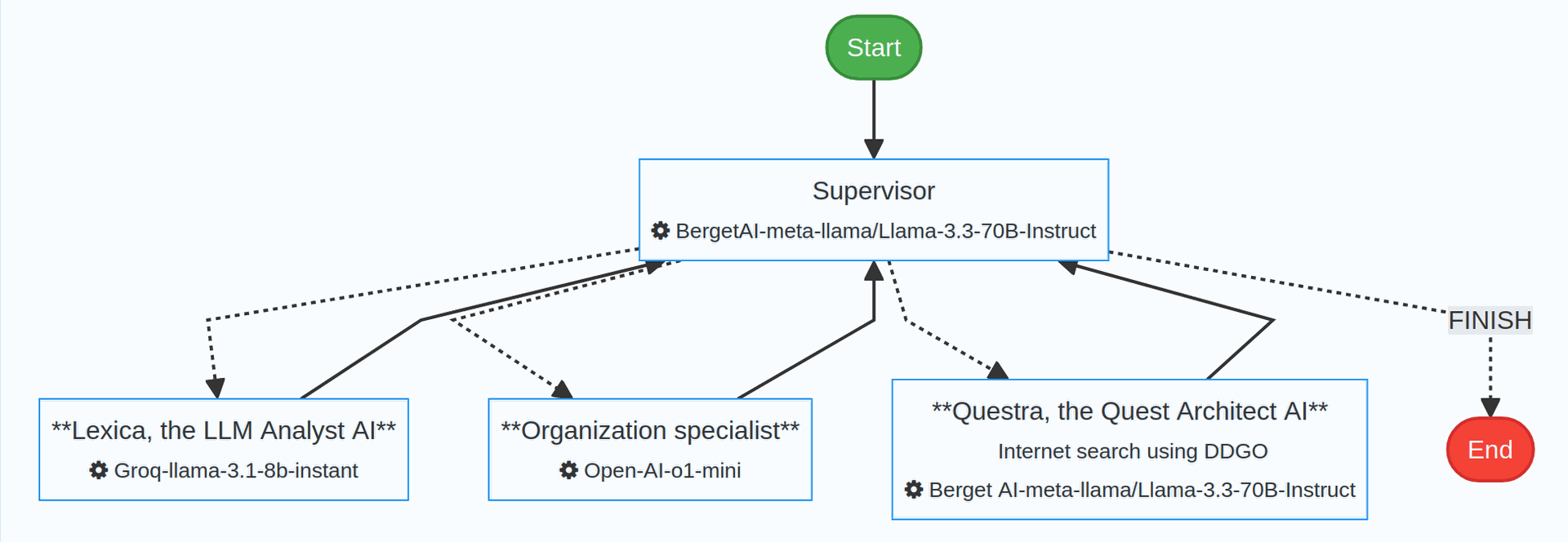

LangGraph builds on LangChain and is a framework for creating more advanced, state-based, and nonlinear workflows with AI agents. LangGraph builds complex collaborations between agents led by a supervisor. It is intended for applications where decisions are not linear but can follow multiple possible paths and feedback loops in a complex AI system.
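A state-based workflow with a supervisor and a feedback loop can be sketched in plain Python as follows. This is not the LangGraph API; the node names (writer, reviewer), the state keys, and the approval rule are assumptions made up for the illustration.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]


def writer(state: State) -> State:
    # Produce or revise a draft; every pass counts as one revision.
    revisions = state["revisions"] + 1
    return {**state, "draft": f"draft v{revisions}",
            "revisions": revisions, "needs_review": True}


def reviewer(state: State) -> State:
    # Arbitrary rule for the sketch: approve from the second revision on.
    return {**state, "approved": state["revisions"] >= 2, "needs_review": False}


def supervisor(state: State) -> str:
    """Pick the next node from the current state (the nonlinear part)."""
    if state["approved"]:
        return "END"
    return "reviewer" if state["needs_review"] else "writer"


NODES: Dict[str, Callable[[State], State]] = {"writer": writer, "reviewer": reviewer}


def run_graph(state: State, max_steps: int = 10) -> State:
    # max_steps guards against a loop that never reaches END.
    for _ in range(max_steps):
        next_node = supervisor(state)
        if next_node == "END":
            break
        state = NODES[next_node](state)
    return state


final = run_graph({"draft": "", "revisions": 0,
                   "needs_review": False, "approved": False})
print(final["draft"], final["approved"])
```

Unlike a fixed sequence, the path here depends on the state: a rejected draft is routed back to the writer before the flow can end.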

Long-term memory (RAG) and tools can be linked to the agents. Tools can be retrieved from the LangChain libraries and used by configuring new tools. It is also possible to create your own tools in the form of new modules. Most major language models support using tools. Long-term memory, however, must be implemented as modules; currently FAISS and PGVector are implemented.

AI orchestration loads libraries and drivers dynamically, so only the libraries required by the application need to be installed on the server. Without this, a large number of libraries would have to be installed, and they could potentially conflict with each other. The result is a lean and flexible solution.
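Dynamic loading of this kind is commonly done with Python's importlib. The sketch below assumes the library and class are stored as plain strings; the helper name load_class is hypothetical and not taken from the Odoo module.

```python
import importlib
from typing import Any


def load_class(library: str, class_name: str) -> Any:
    """Import `library` at call time and return its attribute `class_name`."""
    try:
        module = importlib.import_module(library)
    except ImportError as exc:
        raise RuntimeError(
            f"Library {library!r} is not installed on this server") from exc
    try:
        return getattr(module, class_name)
    except AttributeError as exc:
        raise RuntimeError(f"{library!r} has no class {class_name!r}") from exc


# Standard-library stand-in so the sketch runs anywhere. With the provider
# described in this document the pair would be
# ("langchain_openai", "ChatOpenAI").
ordered_dict_cls = load_class("collections", "OrderedDict")
print(ordered_dict_cls.__name__)
```

Because the import happens only when a provider is actually used, a driver that is not installed affects only the provider that needs it.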

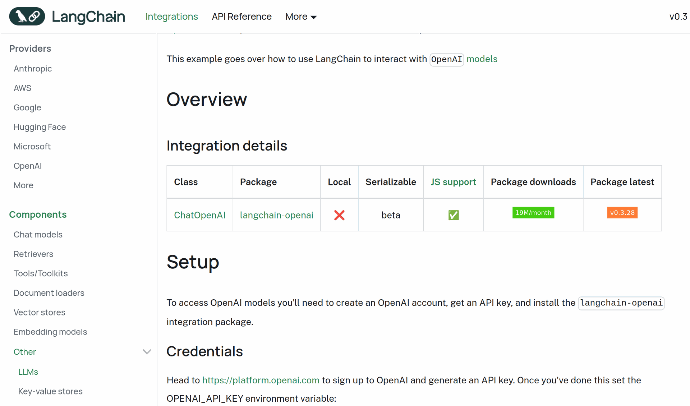

Information about the provider OpenAI

In this example, we will retrieve information about OpenAI. Find out the class (ChatOpenAI) and the library (langchain-openai).

Create an account with OpenAI and obtain an API key.

Here is a list of all drivers: https://python.langchain.com/docs/integrations/providers/all/

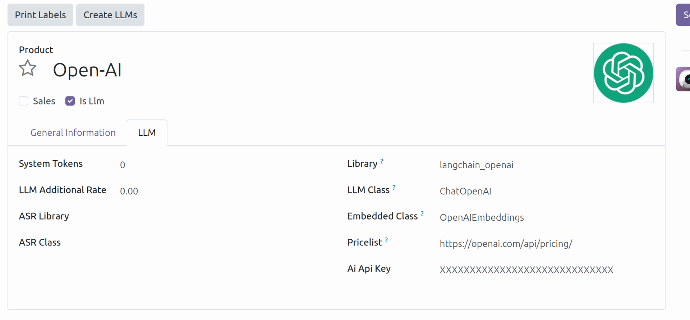

Create/update supplier in Odoo

Specify the library (langchain_openai; note that the hyphen becomes an underscore in this context), the classes (ChatOpenAI and OpenAIEmbeddings), and the API key.

Install the library on the server.

sudo pip3 install langchain-openai --ignore-installed --break-system-packages

Note that the library must be installed centrally (with sudo); otherwise the Odoo server will not find it. The --break-system-packages flag tells pip that we are aware the library is being installed centrally, where it can be used by the entire server.
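To check that an installed library is visible to Python, a small script along these lines can be run with the same interpreter and user as the Odoo server. The helper names are hypothetical, and the hyphen-to-underscore mapping is a common convention that holds for langchain-openai, not a general rule for every package.

```python
import importlib.util


def module_name_for(package: str) -> str:
    """Map a pip package name to its import name (hyphen -> underscore)."""
    return package.replace("-", "_")


def is_importable(module: str) -> bool:
    """Return True if the interpreter running this script can find `module`."""
    try:
        return importlib.util.find_spec(module) is not None
    except (ModuleNotFoundError, ValueError):
        # Raised for dotted names whose parent package is missing,
        # or for modules with a broken spec.
        return False


name = module_name_for("langchain-openai")
print(name, "importable:", is_importable(name))
```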

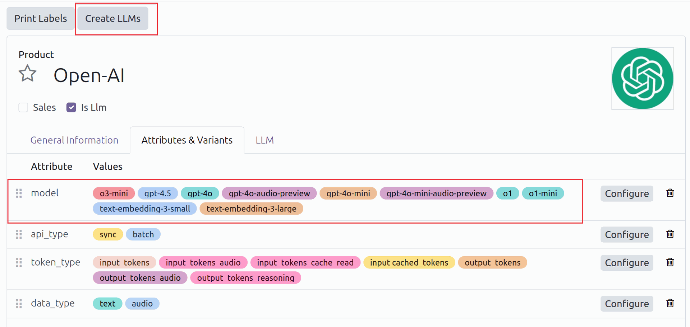

Model is an attribute

The supplier's models are represented by attributes on the product, which in turn form the basis for creating language models in Odoo.

Use the "Create LLMs" button to create language models that can be used in agents.

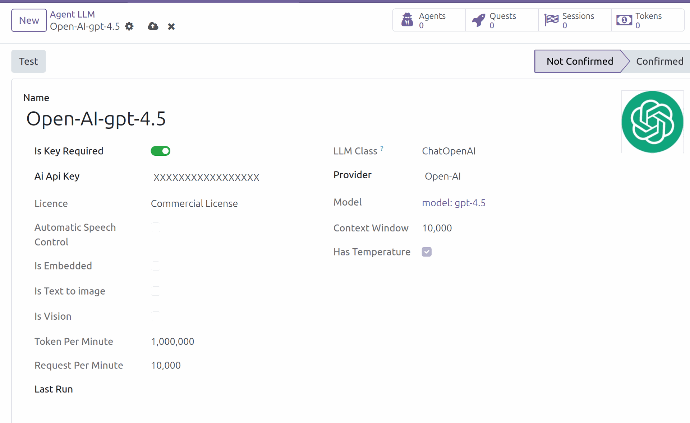

The language model in Odoo

This is the language model used by the agents. The model must be "Confirmed" before it can be selected in an agent. Use the Test button to verify that the model works.

The API key is copied to the language model from the provider; there is also a function that can retrieve the key. The API key is stored individually on each model.

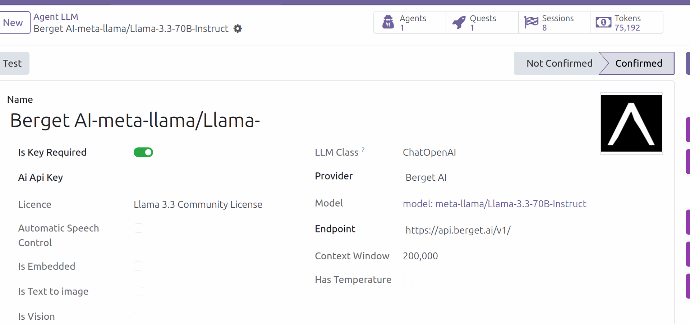

Alternative endpoints

By specifying an alternative endpoint, other providers or local servers can be used. Many providers implement the OpenAI protocol, in which case the OpenAI library and class can be used with a different endpoint, so that requests go to that service instead of OpenAI's.
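In Python terms, pointing the OpenAI class at another service comes down to passing a different base URL. The sketch below assumes langchain-openai is installed when make_chat_model is called; the helper names, the model name, and the endpoint URL are placeholders, not values from the Odoo module.

```python
from typing import Any, Dict, Optional


def build_chat_kwargs(model: str, api_key: str,
                      endpoint: Optional[str] = None) -> Dict[str, Any]:
    """Collect constructor arguments; add base_url only for a custom endpoint."""
    kwargs: Dict[str, Any] = {"model": model, "api_key": api_key}
    if endpoint:
        kwargs["base_url"] = endpoint
    return kwargs


def make_chat_model(model: str, api_key: str, endpoint: Optional[str] = None):
    # Imported lazily so the rest of the sketch runs without the library.
    from langchain_openai import ChatOpenAI
    return ChatOpenAI(**build_chat_kwargs(model, api_key, endpoint))


# Placeholder values for a local, OpenAI-compatible server.
print(build_chat_kwargs("my-local-model", "not-needed",
                        "http://localhost:8000/v1"))
```

Leaving the endpoint empty keeps the library's default, which is OpenAI's own service.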

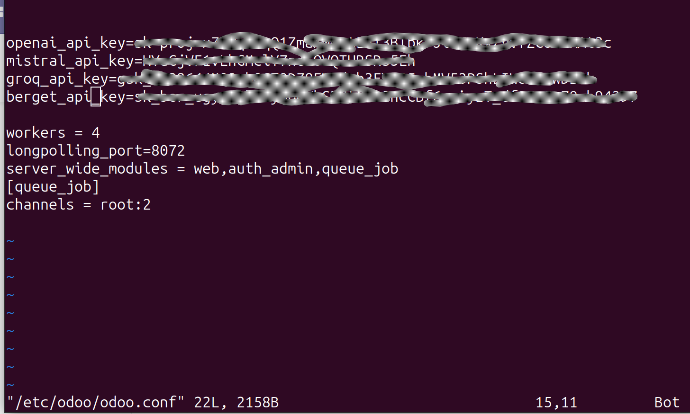

Global API keys in odoo.conf

API keys can also be configured centrally in /etc/odoo.conf. This allows all Odoo instances to access the key. The key can be overridden with a local configuration.
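The precedence between a global and a local key can be sketched with the standard configparser module. The option name openai_api_key and the function resolve_api_key are assumptions for the illustration; the actual option names used by the module are not stated here. Only the [options] section name follows the usual odoo.conf layout.

```python
import configparser
from typing import Optional


def resolve_api_key(conf_text: str, option: str,
                    local_key: Optional[str] = None) -> Optional[str]:
    """Return the local key if one is set, else the global key from the config."""
    if local_key:
        return local_key
    # interpolation=None keeps '%' characters in keys from being interpreted.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(conf_text)
    return parser.get("options", option, fallback=None)


# Hypothetical excerpt of /etc/odoo.conf.
SAMPLE_CONF = """
[options]
openai_api_key = sk-global-example
"""

print(resolve_api_key(SAMPLE_CONF, "openai_api_key"))
print(resolve_api_key(SAMPLE_CONF, "openai_api_key", local_key="sk-local-example"))
```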

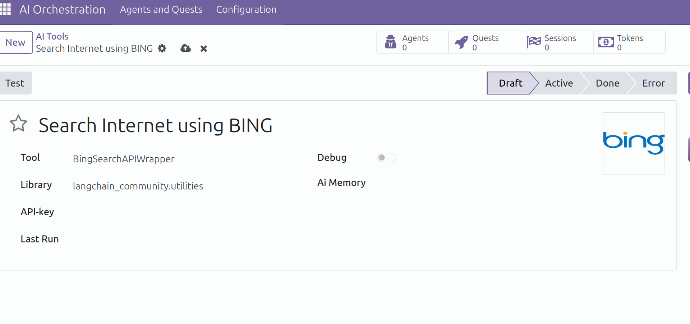

Create tools

To create a tool, specify the class (BingSearchAPIWrapper) and the library (langchain_community.utilities).